Digital Signage Insider: News, Trends and Analysis

- Written by Bill Gerba

- Published: 30 September 2015

I'm going to call out Betteridge's Law of Headlines right away today -- I don't actually know the answer to the question I'm asking. And I'm not really sure if an article of this sort even makes sense to post on a blog purportedly focused on digital signage. But I do know that one of the major presuppositions about making digital signage content is that when you include a call to action, some people will actually take it. Measurably more, in fact, than when you don't give them a little nudge. Yet while it might be possible to show a causal link between, say, a banner ad or in-store campaign and the purchase of the advertised product, I'm starting to wonder how much of an effect a constant bombardment of messages -- marketing and otherwise -- really has, and whether or not it can compel us to take additional action (whether purchasing stuff or otherwise) by reducing the action's perceived difficulty. The answer to this has ramifications far beyond digital signage, retail marketing and even advertising in general -- when our smartphones are bleeping important external news from subscription services, our IoT and smart home devices are chiming in with important notices about physical events, and our fitness trackers and wearables are contributing a steady flow of personal information (that was always out there but we never paid attention to before), I have to imagine that at a certain point the sheer amount of data we process will become distracting. But will the cumulative flow of data distract to the point where no message gets processed and consequently no decisions are influenced? And if so, how near to (or far from) that point are we already?

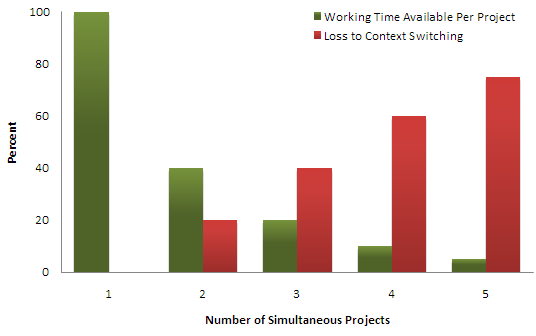

It has been well known for over a decade that human beings are pretty terrible at multitasking. As Jeff Atwood at Coding Horror notes, we may lose up to 20% of our cognitive performance when switching between just a handful of tasks (this is known as context switching, and is also a problem in computer science). We're constantly switching contexts, of course. As you walk through the grocery store, for example, you may be visually surveying a dozen different signs, product packages and even the faces of nearby shoppers as you mentally walk through your shopping list and pull up some coupons on your mobile phone. This works fairly well because a) other shoppers are generally doing the same thing, so to a certain extent you are able to mirror their behaviors, and b) the different behaviors all fall into the same mental bucket of "grocery shopping," so your context switches are rapid and cooperative. Of course, if you happen to be doing that while getting a text or posting to Facebook, all of those nice mental optimizations go out the window, which is why you now also frequently see people colliding with each other or stopped dead in the middle of an aisle at a grocery store.

Multitasking and context switching have become such a problem that by some estimates the US economy loses nearly $1 trillion a year due to the downtime associated with switching between tasks. Intel estimates that the problem costs their firm alone upwards of $1 billion. So clearly, being constantly interrupted, reminded and updated is not always a good thing, especially when your measure is overall productivity.

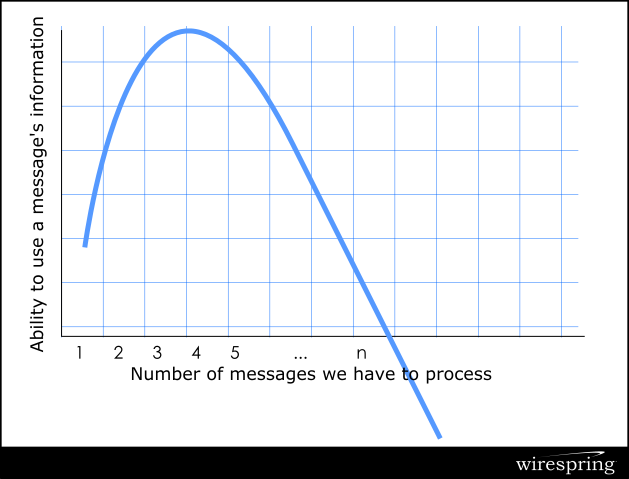

But where do things like the Twitter Buy button, Amazon Dash buttons, FitBits and Amazon's Echo IoT hub come into play? All of these devices make it easy to track our lives down to minutiae and make snap decisions on everything from product purchases to meal plans to taking the stairs instead of the elevator. Do these little nudges affect our mental context the same way as "big" task switches? I haven't seen any formal research on the subject yet, but I'm willing to bet that at some point, adding more messages becomes a cognitive problem, creating an overall net loss in terms of what we're able to process and act on.

We know that some psychological tenets hold true over large populations. For example, most people can hold 7 (+/- 2) items in memory at once. I'd bet that there will be some similar "law of distractions" (if there isn't already) that holds that without training, most people will only be able to handle a small number of new messages at once before each additional message starts to incur mental penalties that cancel out whatever benefit there might have been to sending the message in the first place.

Information overload is a real thing, with measurably bad consequences. For the most part, digital signs have avoided the role of adding too many messages because they generally focus on context-specific content (that old adage of delivering the right message to the right audience at the right time, blah, blah, blah). But with the IoT that's no longer true. A student trying to focus on a lecture will face a context switch when his fitness tracker reminds him to get up and move. A corporate executive will have to switch tasks when an "urgent" message is sent to his phone letting him know a delivery was left at the front door. And who knows what's going to happen when my smart hub starts telling me that I'm almost out of milk and a dozen other things in the fridge, and would I like to place an order now???

We're going to have to adjust our situational awareness if we are to have any chance of using most of these new messages rather than letting them wash over us like noise. So far, research suggests that this is going to be hard -- maybe impossible -- for us to ever do well. But if manufacturers continue adding new and smarter devices and service providers continue to come up with new and more clever ways to streamline our choices and nudge us into making the "right" one, I have to hope that somebody is looking into this problem and thinking of ways to solve it.

- Written by Bill Gerba

- Published: 24 August 2015

Retailers were arguably some of the first power-users of what we now call Big Data -- information pulled from a variety of sources and crunched together to make predictions and decisions. In fact, I think Wal-Mart (that's how they spelled it at the time) was one of the earliest users of the term "data warehouse" because they were generating such a staggering amount of information, and collecting it all in a single place for analysis.

Today you don't have to be the biggest retailer in the world to be able to put together a Big Data plan. A huge amount of useful information can be pulled for almost nothing thanks to for-hire marketing analytics providers, government website APIs for pulling socioeconomic, ethnographic and geographic information, and of course social networks like Twitter and Facebook, which have access to (and often republish) the public (and sometimes private) information of billions of individuals around the world.

While thinking about this, I came across a nice infographic from Data Science Central which attempts to outline some of the benefits that Big Data analysis can confer on participating retailers. When I first looked over the graphic, one part (where they posit that retailers have transitioned from "making transactions" to "forming relationships" with customers) struck me. My initial reaction was "ah, typical Big Data hyperbole." But after considering it more, it seemed more true. Today the majority of retailers (online and off) that I frequent "know" a lot about me. They're -- at times -- uncannily good at predicting what I'm shopping for, what I need, and what I simply want. And yes, the "relationship" between us is extremely superficial, but it's leaps and bounds ahead of what it would have been shopping in a big retail store or discount club 15 or 20 years ago. In a nutshell: it's useful.

I've clipped only a small part of the infographic below. The whole thing is quite a bit larger and available on the Data Science Central link above, and is certainly worth a few minutes of contemplation.

- Written by Bill Gerba

- Published: 14 August 2015

For those folks wondering if or when we were planning to re-start our email newsletters, the answer is: Real Soon Now™. We've had as many as 25,000 subscribers over the years, and cleaning out those who have left the industry, are no longer interested, or, in a few cases, died, has been more challenging than I expected.

We plan to send out subscription notice emails to everyone next week, and resume semi-regular blog posts about all things digital signage shortly afterward, starting with more content-related stuff, since that's my jam (I'm also going to start using more date-able slang so that these posts automagically become more cringeworthy over time).

- Written by Bill Gerba

- Published: 17 August 2015

Once upon a time it was fashionable to produce specialized POP displays that used a short-throw projector to light up a roughly human-shaped piece of 3M Vikuiti film. When a recorded image of a person was displayed, it kinda, sorta looked like a live person talking, if you squinted and cocked your head just the right way. These "virtual mannequins" never really took off in a big way, probably because they were expensive, took up a fair amount of floor space, and, once you got past the novelty, looked terrible. To wit:

However, a new approach uses a ridiculously impractical (for now) 216 projectors to simulate a 3D object on a flat screen. By recording the initial subject using an array of cameras instead of just one, and then using a computer to crunch the resulting data down into small slices, each projector can display the light for just a small portion of the subject at a specific viewing angle. The result is a compelling and convincing 3D-looking display on a 2D surface:

c109-f109 3005-a77-rep video-v2 from ACM SIGGRAPH on Vimeo.

Given the cost and complexity of putting together the initial footage, and the massive amount of floor space that the display device takes up, I think that practical applications for the technology are currently somewhat limited. An article over at Gizmodo suggests that museums and other educational contexts might benefit the most, especially in cases where adding a human touch would be beneficial, but it might be too expensive to keep actual humans on staff. The summary article from the researchers at USC suggests similar applications.

I sometimes think, though, that these researchers work on these projects simply because they can and they're awesome.

Subscribe to the Digital Signage Insider RSS feed
