In search of an affordable and scalable testing method

We wanted an approach that was practical, scalable and objective, and that could yield real, actionable results. It also had to work with the creative process and be repeatable as content development went on. Content creation gets a lot of lip service in our industry. But as we began our research in this vein, it quickly became apparent that very little attention (and even less research effort) was actually being given to it. With little more than a vague goal of "figuring out how to make digital signage better," we stated our challenge: come up with a better, faster and cheaper way to conduct research on the effectiveness of digital signage content.

It's not that there aren't ways to do content research right now. In fact, I've written a couple of articles in the past about how content creators can study their work from a viewer's perspective and make simple tweaks to ensure that the content works on-screen. The problem is that those techniques can only go so far, and the other options available right now simply aren't right for most companies in this space. There are a few high-end research companies and services like DS-IQ that can help companies make decisions, but they require a lot of up-front integration and are out of reach for most agencies and clients. Real-world testing is frequently a logistical nightmare. Making and testing content in a trial-and-error fashion is expensive and time-consuming, and, depending on your host venues, may not be possible at all. As one customer succinctly put it: "By the time you've figured out what you really need to test, you've already blown your budget."

Amazon Mechanical Turk is a trademark of Amazon.com, Inc. or its affiliates in the United States and/or other countries.


That left us looking for a few specific things:

- A low-cost solution that would let us first test several thousand control cases, so we could establish baseline accuracy and precision measures.

- Something that could be easily modified to run different kinds of tests.

- An approach that could be set up and dismantled quickly, without needing to schedule something months or weeks in advance.

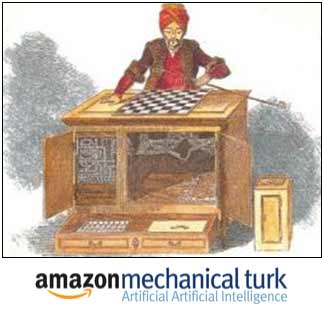

Enter Amazon's Mechanical Turk

Amazon Mechanical Turk is a web portal where "Requesters" can set up simple jobs that tens of thousands of "Workers" around the world can choose to do. For each task they complete, Workers earn a small reward, usually a few cents. The system is ideally suited to jobs that are hard for a computer to do, but easy for a person to do -- for example, looking at a photo to see if there's a red car, a man with a tattoo... or what's on a digital sign.

We wanted to use the system to ask Workers about digital signage images. Our thought was that we could show images of just content, or content on screens, or content on screens in retail, office, hotel or restaurant environments, and then ask the Workers questions about what they saw. Because Mechanical Turk is so inexpensive compared to traditional polling techniques, we figured we could do it a couple of thousand times, check our results, and do it a couple of thousand times more just to be certain.

Unfortunately, the Mechanical Turk environment doesn't offer a lot of the tools we needed to run the kinds of tests we wanted to do. And we knew going in that no computer simulation would ever be a perfect substitute for real-world testing. So, we came up with a list of questions that we wanted to answer before moving forward with the research:

- How can we control the Worker's "environment"?

- Do we need to simulate the venue as well as the content?

- Do we need to test with video/moving images, or can we use stills?

- How can we test whether the results are accurate?

- How big must a sample be to be significant?

- What kinds of things can we definitely not test this way?

- Are there hidden costs?
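To give a feel for the sample-size question, here is a minimal sketch using the textbook formula for estimating a proportion (say, the share of Workers who correctly recall what was on the sign) within a chosen margin of error. It is not necessarily the method we ended up using; the function names, the 95% confidence level and the 3-cent reward are assumptions made purely for illustration, and the cost figure ignores any platform fees.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(margin_of_error, confidence=0.95, expected_proportion=0.5):
    """Responses needed to estimate a proportion within +/- margin_of_error.

    Uses n = z^2 * p * (1 - p) / e^2. The default p = 0.5 is the most
    conservative choice (it gives the largest n).
    """
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n = (z ** 2) * expected_proportion * (1 - expected_proportion) / margin_of_error ** 2
    return math.ceil(n)


def reward_cost_in_cents(responses, reward_cents):
    """Total Worker rewards in cents (hypothetical reward, fees not included)."""
    return responses * reward_cents


if __name__ == "__main__":
    for margin in (0.05, 0.03, 0.02):
        n = sample_size_for_proportion(margin)
        cost = reward_cost_in_cents(n, reward_cents=3)  # assumed 3 cents per task
        print(f"margin +/-{margin:.0%}: {n} responses, about {cost} cents in rewards")
```

Under these assumptions, tightening the margin of error from 5 to 2 percentage points pushes the requirement from a few hundred responses into the low thousands per test, which is the range we had in mind when we talked about running things "a couple of thousand times."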

After a few weeks of kicking ideas around and talking to researchers in similar fields, we eventually settled on a list of variables, a set of control experiments, and a framework for conducting our tests inside of Mechanical Turk. For the first batch of tests, we had several variables that we were interested in looking at. We started with the low-hanging fruit: common questions in the industry, all of which are expensive to test in the real world using current techniques:

- Color and contrast combinations

- Number of words present on screen

- Brightness of screen environment

- Screen orientation (portrait/landscape)

- Amount of visual "clutter" in environment

- Distraction effect of people near the screen

- Size of screen relative to active attention area
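One way to see why these variables get expensive to test in the field is to count how quickly the combinations pile up. The sketch below enumerates every combination of a few of the variables above. The levels ("few"/"many", "dim"/"bright" and so on) are placeholders invented for this example, and a full crossing of every variable is an assumption, not a description of how our tests were actually structured.

```python
from itertools import product

# Hypothetical levels for illustration only; the list above names the
# variables but does not specify the values that were tested.
VARIABLES = {
    "orientation": ["portrait", "landscape"],
    "word_count": ["few", "many"],
    "environment_brightness": ["dim", "bright"],
    "clutter": ["low", "high"],
}


def build_conditions(variables):
    """Return one dict per test condition, covering every combination of levels."""
    names = list(variables)
    return [dict(zip(names, combo)) for combo in product(*variables.values())]


conditions = build_conditions(VARIABLES)

if __name__ == "__main__":
    print(f"{len(conditions)} conditions to test")
    for condition in conditions[:3]:
        print(condition)
```

Even with only two levels for each of four variables, that is 16 distinct images to show Workers, and each additional two-level variable doubles the count.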

Analyzing the results

Whew. With such a lengthy lead-in, we'll have to save the more interesting stuff -- like setting up the tests, analyzing the results, and finally answering some of the "big" questions -- for next week. I guarantee you'll learn something you didn't know before. An example? Well, let's just say that if you were planning to deploy your next batch of screens in portrait orientation so that they'd be more eye-catching, you might want to reconsider. Why? Well, go read up on what we've previously written about the active attention zone, and then tune in to next week's blog article!

Subscribe to the Digital Signage Insider RSS feed
